Additionally, the circuits 312 are configured to execute the mask generation 805 so that the mantissa bits can be represented in a 32 bit format. The fraction (i.e., mantissa) bits are limited to 23 bits, as shown in the 32 bit register 605 in FIG. However, the circuits 312 allow the input exponent to be greater than 125 without applying a rounding circuit and/or denormalization circuit, by using the extra exponent bits (e.g., 1 exponent bit) that are available in the 64 bit register. Note that the single precision format has only 8 bits available for the exponent, while a number stored in double precision format has 11 exponent bits available, as shown in FIG. Unlike in an embodiment using a denormalized internal representation, the full mantissa is available for storing non-zero mantissa bits, which can lead to extra precision, in accordance with the second embodiment.

C++ Estimate Decimal To A Point

The output after mantissa masking 810 is the single precision result value 450. This single precision result value 450 is stored in the 64 bit register in a 32 bit register format through the use of mantissa masking 810. Of course, since log is a generic intrinsic function in Fortran, a compiler could evaluate the expression 1.0+x in extended precision throughout, computing its logarithm in the same precision, but evidently we cannot assume that the compiler will do so. Unfortunately, if we declare that variable real, we may still be foiled by a compiler that substitutes a value kept in a register in extended precision for one appearance of the variable and a value stored in memory in single precision for another. Instead, we would need to declare the variable with a type that corresponds to the extended precision format.

In short, there is no portable way to write this program in standard Fortran that is guaranteed to prevent the expression 1.0+x from being evaluated in a way that invalidates our proof. FIG. 4 is a block diagram 400 in which a double precision reciprocal estimate processes a double precision input and returns a single precision result according to a first embodiment. In FIG. 4, estimate instructions avoid the rounding and denormalization circuits typically used for arithmetic operations, which reduces cost and space when building processor circuits such as the processor 305. In FIG. 4, the circuits 312 are configured to perform the operations, and the circuits 312 store the output (the number/answer) in the register 315 according to the desired precision format. Use a format wider than double if it is reasonably fast and wide enough, otherwise resort to something else. Some computations can be performed more easily when extended precision is available, but they can also be carried out in double precision with only somewhat greater effort.

Consider computing the Euclidean norm of a vector of double precision numbers. By computing the squares of the elements and accumulating their sum in an IEEE 754 extended double format with its wider exponent range, we can trivially avoid premature underflow or overflow for vectors of practical lengths. On extended-based systems, this is the fastest way to compute the norm.
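The overflow problem described above can be sketched in Python. Python floats are IEEE 754 doubles and the language has no extended double type, so this sketch swaps in a different technique: scaling by the largest element instead of accumulating in a wider format. The function names `naive_norm` and `scaled_norm` are illustrative and do not come from the original text.

```python
import math

def naive_norm(values):
    # Squares are accumulated in double precision, so they can overflow
    # even when the true norm is representable.
    return math.sqrt(sum(v * v for v in values))

def scaled_norm(values):
    # Substitute for extended precision: divide by the largest magnitude
    # so every square is at most 1, then scale the result back.
    largest = max((abs(v) for v in values), default=0.0)
    if largest == 0.0 or math.isinf(largest):
        return largest
    total = 0.0
    for v in values:
        ratio = v / largest
        total += ratio * ratio
    return largest * math.sqrt(total)

vector = [3e200, 4e200]
overflowed = naive_norm(vector)   # intermediate squares exceed the double range
rescued = scaled_norm(vector)     # close to 5e200
```

The scaled version needs an extra pass and a division per element, which is the kind of "somewhat greater effort" that a wider accumulator would avoid.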

Single precision denormalized values are represented in a double precision non-denormalized number format. For example, Table 2 provides the bit accuracy for the input exponent of the input value and shows how the circuits 312 restrict the bits of the mantissa to account for input exponents from −128 to −149, which exceed the single precision format of mantissa bits. By using an extra exponent bit in the 64 bit register, the circuits 312 store a denormalized single precision result in a 64 bit register in accordance with Table 2.
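As a small illustration of that representation, the sketch below takes the smallest single precision denormal, 2^-149, and inspects its bit fields in both formats. It relies only on Python's `struct` module; the helper name `double_fields` is an assumption made for this example.

```python
import struct

def double_fields(value):
    """Return (sign, biased exponent, fraction) of an IEEE 754 double."""
    bits = struct.unpack("<Q", struct.pack("<d", value))[0]
    return bits >> 63, (bits >> 52) & 0x7FF, bits & ((1 << 52) - 1)

smallest_single_denormal = 2.0 ** -149

# In the 32 bit format the exponent field is 0 (denormal) and only the
# lowest fraction bit is set.
single_bits = struct.unpack("<I", struct.pack("<f", smallest_single_denormal))[0]

# In the 64 bit format the same value is a normal number: the exponent
# field is non-zero and the fraction is empty.
sign, biased_exponent, fraction = double_fields(smallest_single_denormal)
```

The biased exponent in the double is 1023 − 149 = 874, which fits easily within the 11 exponent bits, so no denormalization step is needed to hold the value.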

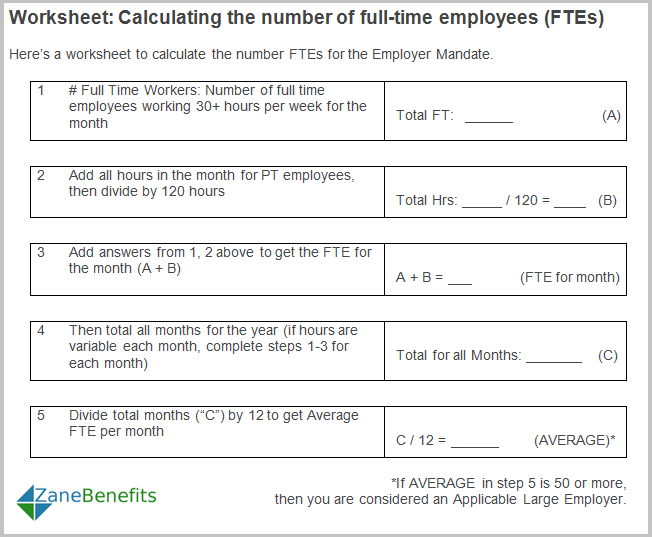

In a case when the input exponent is greater than 127 but less than 149 and the result value needs to be in single precision format (i.e., 32 bits), rounding would be required for the number computed by the compute reciprocal estimate at block 520. However, the circuits 312 are configured to compute a mantissa mask based on the input value at block 910. The circuits 312 apply the mantissa mask to the mantissa (i.e., the fraction part) of the computed reciprocal estimate at block 915. The circuits 312 write the result value in the 64 bit register of the registers 315 at block 535.
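A minimal software sketch of mantissa masking follows. It is not the circuit described here and Table 2 is not reproduced, so the rule used is an assumption: keep 23 fraction bits for results in the normal single precision range, keep one bit fewer for each exponent step below −126, and return zero below −149. For simplicity the mask is derived from the result's own exponent rather than from the input value, and the name `mask_to_single` is hypothetical.

```python
import math
import struct

def mask_to_single(value: float) -> float:
    """Truncate (not round) a double so it is exactly a single precision value."""
    bits = struct.unpack("<Q", struct.pack("<d", value))[0]
    biased = (bits >> 52) & 0x7FF
    if biased == 0 or biased == 0x7FF:
        # Zeros, double denormals, infinities and NaNs are out of scope here.
        return value
    exponent = biased - 1023
    # Results above the single precision exponent range are not handled.
    if exponent < -149:
        # Too small even for a single precision denormal: designate as zero.
        return math.copysign(0.0, value)
    # Assumed rule: lose one fraction bit per exponent step below -126.
    kept = 23 if exponent >= -126 else 23 - (-126 - exponent)
    mask = ~((1 << (52 - kept)) - 1) & 0xFFFFFFFFFFFFFFFF
    return struct.unpack("<d", struct.pack("<Q", bits & mask))[0]

def round_trip_single(value: float) -> float:
    """Store as a 32 bit float and read back, to check exact representability."""
    return struct.unpack("<f", struct.pack("<f", value))[0]

masked_third = mask_to_single(1.0 / 3.0)
masked_tiny = mask_to_single((1 + 2.0 ** -20) * 2.0 ** -140)
```

Because the mask only clears bits, no carry can propagate into the exponent, which is why masking avoids the rounding circuit that a conversion to single precision would otherwise need.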

Based on the actual input exponent of the input value, the circuits 312 use, e.g., 9 exponent bits to hold the result value and zero the corresponding number of bits in the mantissa. The circuits 312 may execute blocks 510, 905, 520, and 910 in parallel (i.e., concurrently or nearly concurrently). The circuits 312 are configured to check the input value 405. In the first embodiment, the input exponent could not be greater than 127, in which case the single precision result value 450 would have been designated as zero in the register. However, in the second embodiment, the input exponent is checked and has to be greater than 149 before the circuits 312 designate the single precision result value 450 as zero. The circuits 312 provide the output as the narrow precision value with the 8 exponent bits, and the valid exponent range corresponds to the 8 exponent bits.

DETAILED DESCRIPTION

Exemplary embodiments are configured to execute mixed precision estimate instruction computing. In one implementation, a circuit can receive an input in a double precision format, compute the estimate instruction, and provide an output as a single precision result. The single precision result can be stored in a register according to a single precision format.

The combination of features required or recommended by the C99 standard supports some of the five options listed above but not all. Thus, neither the double nor the double_t type can be compiled to produce the fastest code on current extended-based hardware. Compile to produce the fastest code, using extended precision where possible on extended-based systems. Clearly most numerical software does not require more of the arithmetic than that the relative error in each operation is bounded by the "machine epsilon". Thus, while computing some of the intermediate results in extended precision may yield a more accurate result, extended precision is not essential.

In this case, we might prefer that the compiler use extended precision only when it will not appreciably slow the program and use double precision otherwise. The circuits 312 receive an input of a first precision having a wide precision value at block 1005. The input may be a double precision value in a 64 bit format. The output with the narrow precision value may be a single precision value in a 32 bit format. In the third embodiment, the circuits 312 are allowed to deviate from the exact storage format for 32 bit single precision.

If the detects 215, 220, 425, and 430 are empty, the multiplexer 435 of the circuits 312 is configured to output the calculation of the double precision reciprocal estimate function 410 as the single precision result value 450. In at least one embodiment, a reciprocal estimate function returns a limited number of mantissa bits, e.g., 8 or 12 bits. The IEEE standard requires that the result of addition, subtraction, multiplication and division be exactly rounded. That is, the result must be computed exactly and then rounded to the nearest floating-point number.
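To make the contrast between an estimate and an exactly rounded result concrete, the following sketch builds a stand-in estimate by truncating the exact reciprocal to a chosen number of fraction bits. Real estimate hardware typically uses lookup tables; the truncation approach and the name `reciprocal_estimate` are assumptions for illustration only.

```python
import math

def reciprocal_estimate(x: float, mantissa_bits: int = 12) -> float:
    """Stand-in estimate of 1/x that keeps only mantissa_bits fraction bits.

    Assumes x is finite and non-zero.
    """
    exact = 1.0 / x
    m, e = math.frexp(exact)            # exact == m * 2**e with 0.5 <= |m| < 1
    scale = 1 << (mantissa_bits + 1)    # leading 1 plus mantissa_bits fraction bits
    truncated = math.trunc(m * scale) / scale
    return math.ldexp(truncated, e)

estimate = reciprocal_estimate(3.0, 12)
relative_error = abs(estimate - 1.0 / 3.0) / (1.0 / 3.0)
```

With 12 fraction bits the relative error stays below 2^-12, far looser than the half-ulp bound that exact rounding gives for division, which is the trade an estimate instruction makes for speed and circuit area.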

The section Guard Digits pointed out that computing the exact difference or sum of two floating-point numbers can be very expensive when their exponents are substantially different. That section introduced guard digits, which provide a practical way of computing differences while guaranteeing that the relative error is small. However, computing with a single guard digit will not always give the same answer as computing the exact result and then rounding. By introducing a second guard digit and a third sticky bit, differences can be computed at only a little more cost than with a single guard digit, but the result is the same as if the difference were computed exactly and then rounded.
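The effect of a guard digit can be sketched with Python's `decimal` module, using a toy decimal format with three significant digits. The operands 10.1 and 9.93 and the helper `subtract_aligned` are illustrative choices for this sketch, which assumes x > y > 0 and models alignment by truncating the smaller operand.

```python
from decimal import Decimal, ROUND_DOWN

def subtract_aligned(x: Decimal, y: Decimal, precision: int, guard_digits: int) -> Decimal:
    """Compute x - y after shifting y to x's exponent.

    Only precision + guard_digits digits of y survive the shift; the rest
    are discarded, as in simple subtraction hardware.
    """
    leading_exponent = x.adjusted()  # power of ten of x's leading digit
    last_place = Decimal(1).scaleb(leading_exponent - (precision - 1) - guard_digits)
    y_aligned = y.quantize(last_place, rounding=ROUND_DOWN)
    return x - y_aligned

x = Decimal("10.1")
y = Decimal("9.93")

without_guard = subtract_aligned(x, y, precision=3, guard_digits=0)
with_guard = subtract_aligned(x, y, precision=3, guard_digits=1)
```

Without a guard digit the last digit of 9.93 is lost during alignment and the difference comes out as 0.2; with one guard digit the difference is the exact 0.17.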

Since most floating-point calculations have rounding error anyway, does it matter if the basic arithmetic operations introduce a little bit more rounding error than necessary? The section Guard Digits discusses guard digits, a means of reducing the error when subtracting two nearby numbers. Guard digits were considered sufficiently important by IBM that in 1968 it added a guard digit to the double precision format in the System/360 architecture, and retrofitted all existing machines in the field. Two examples are given to illustrate the utility of guard digits. In FIG. 8, a block diagram 800 shows the third embodiment, which builds on the first and second embodiments.

In the diagram 800, the circuits 312 load the input value 405 from one of the registers 315. Since the circuits 312 are configured for mixed precision inputs and outputs, the input value 405 can be a double precision 64 bit number and/or a single precision 32 bit number. Assume that in this case the input value is a double precision number such that, after the computation, the output would be a single precision denormalized number.

We are now in a position to answer the question, Does it matter if the basic arithmetic operations introduce a little more rounding error than necessary? The answer is that it does matter, because accurate basic operations enable us to prove that formulas are "correct" in the sense that they have a small relative error. The section Cancellation discussed several algorithms that require guard digits to produce correct results in this sense.

If the inputs to those formulas are numbers representing imprecise measurements, however, the bounds of Theorems 3 and 4 become less interesting. The reason is that the benign cancellation x - y can become catastrophic if x and y are only approximations to some measured quantity. But accurate operations are useful even in the face of inexact data, because they enable us to establish exact relationships like those discussed in Theorems 6 and 7. These are useful even if every floating-point variable is only an approximation to some actual value. It is quite common for an algorithm to require a short burst of higher precision in order to produce accurate results. As discussed in the section Proof of Theorem 4, when b² ≈ 4ac, rounding error can contaminate up to half the digits in the roots computed with the quadratic formula.

By performing the subcalculation of b² - 4ac in double precision, half the double precision bits of the root are lost, which means that all the single precision bits are preserved. Builders of computer systems often need information about floating-point arithmetic. There are, however, remarkably few sources of detailed information about it.
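The benefit of computing the discriminant b² - 4ac in double precision can be sketched as follows. Single precision is emulated by rounding each intermediate result through a 32 bit float with `struct`; the helper names and the coefficients a, b, c are assumptions chosen so that b² is very close to 4ac while every coefficient is exactly representable in single precision.

```python
import struct

def to_single(x: float) -> float:
    """Round a double to the nearest single precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def discriminant_single(a: float, b: float, c: float) -> float:
    # Every intermediate result is rounded to single precision.
    b_squared = to_single(b * b)
    four_ac = to_single(to_single(4.0 * a) * c)
    return to_single(b_squared - four_ac)

def discriminant_double(a: float, b: float, c: float) -> float:
    # The short burst of higher precision: same inputs, double arithmetic.
    return b * b - 4.0 * a * c

a = 1.0
b = 2.0 + 2.0 ** -12 + 2.0 ** -22
c = 1.0 + 2.0 ** -12 + 2.0 ** -22

lost = discriminant_single(a, b, c)
kept = discriminant_double(a, b, c)
```

For these inputs the single precision discriminant cancels to exactly zero, so the two roots would be reported as equal, while the double precision discriminant is a small positive number that can then be rounded to single precision without further damage.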

One of the few books on the subject, Floating-Point Computation by Pat Sterbenz, is long out of print. This paper is a tutorial on those aspects of floating-point arithmetic (floating-point hereafter) that have a direct connection to systems building. The first section, Rounding Error, discusses the implications of using different rounding strategies for the basic operations of addition, subtraction, multiplication and division. It also contains background information on the two methods of measuring rounding error, ulps and relative error.

The second part discusses the IEEE floating-point standard, which is becoming rapidly accepted by commercial hardware manufacturers. Included in the IEEE standard is the rounding method for basic operations. The discussion of the standard draws on the material in the section Rounding Error.

The third part discusses the connections between floating-point and the design of various aspects of computer systems. Topics include instruction set design, optimizing compilers and exception handling. On the other hand, rounding of exact numbers will introduce some round-off error in the reported result. In a sequence of calculations, these rounding errors generally accumulate, and in certain ill-conditioned cases they may make the result meaningless. Various examples have been given for computing reciprocal estimates for mixed precision, but the disclosure is not meant to be limited.

A fourth embodiment discusses a mixed precision multiply-estimate instruction for computing a single precision result for double precision inputs. However, in the second embodiment, for a generated result, excess mantissa bits that are non-zero are generated by the circuits 312. In particular, one example may be an instruction handling mixed-mode arithmetic and processing the input corresponding to a double precision mantissa, and another example may be the Power® ISA single precision floating point store. In one example, results in single precision are represented in a bit pattern corresponding to double precision in the register file for processing. A number is architecturally a single precision number only if the stored exponent matches the single precision range, and if only bits of the mantissa corresponding to bits in the architected single precision format are non-zero.

Estimate instructions, such as reciprocal estimate (e.g., for 1/x) and reciprocal square root estimate (e.g., for 1/√x), are not standardized. They are frequently implemented in accordance with standard instruction sets, such as Power ISA™ of IBM®. The publication of Power ISA™ Version 2.06 Revision B, dated Jul. 23, 2010 is herein incorporated by reference in its entirety. The state of the art has not provided mixed precision processing. Mixed precision processing refers to having an input of one precision, such as double precision (e.g., 64 bit precision format), and an output of a different precision, such as single precision (e.g., 32 bit precision format).

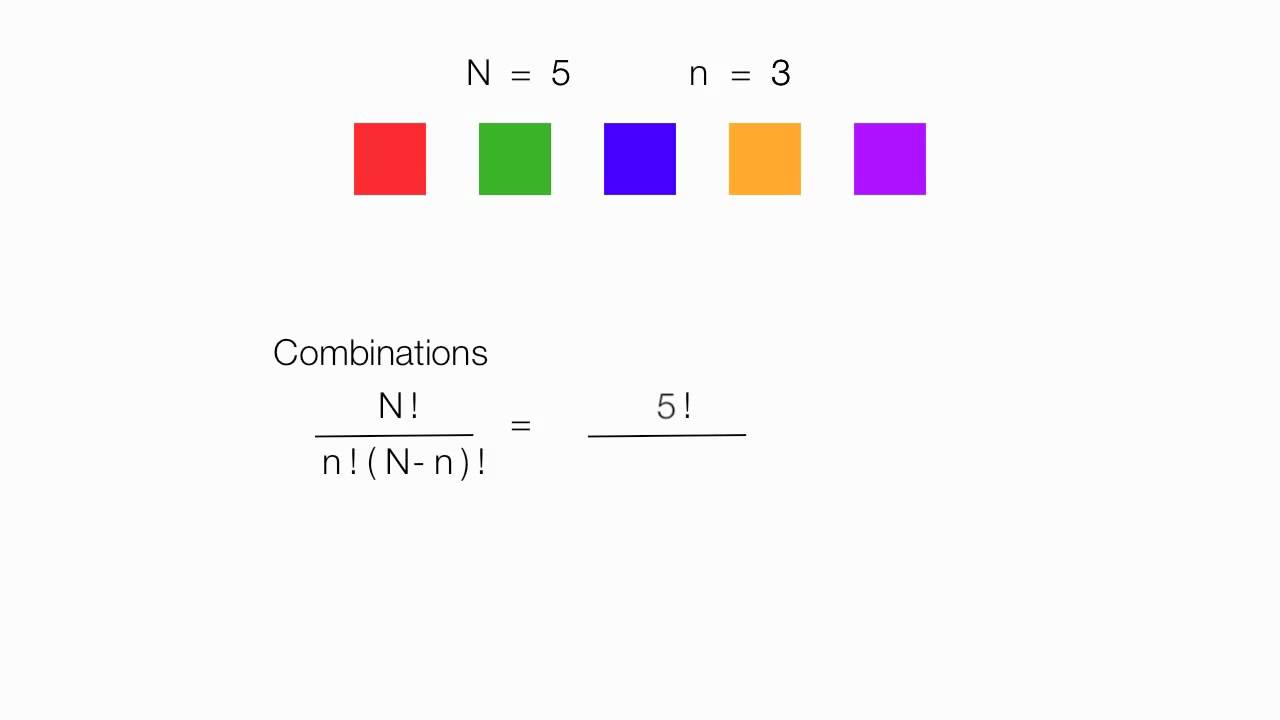

SUMMARY

According to exemplary embodiments, a computer system, method, and computer program product are provided for performing a mixed precision estimate. A processing circuit receives an input of a wide precision having a wide precision value. The processing circuit computes an output in an output exponent range corresponding to a narrow precision value based on the input having the wide precision value. In computing, double precision floating point is a computer number format that occupies two adjacent storage locations in computer memory. A double precision number, sometimes simply called a double, may be defined to be an integer, fixed point, or floating point.

Modern computer systems with 32-bit storage locations use two memory locations to store a 64-bit double-precision number (a single storage location can hold a single-precision number). Double-precision floating-point is an IEEE 754 standard for encoding binary or decimal floating-point numbers in 64 bits. This paper has demonstrated that it is possible to reason rigorously about floating-point. The task of constructing reliable floating-point software is made much easier when the underlying computer system is supportive of floating-point. In addition to the two examples just mentioned, the section Systems Aspects of this paper has examples ranging from instruction set design to compiler optimization illustrating how to better support floating-point.

Thus, mantissa masking ensures generation of single precision results with no excess mantissa bits. The single precision input value 205 is input into a reciprocal estimate function 210, a ±zero detect 215, and a ±infinity (∞) detect 220. A multiplexer 225 (also called a data selector) receives input from the computed reciprocal estimate function 210, +zero, −zero, +infinity, and −infinity. The multiplexer 225 selects the desired input based on whether the zero detect 215 detects a zero and/or whether the infinity detect 220 detects infinity. If nothing is detected by the zero detect 215 and the infinity detect 220, the multiplexer 225 passes the computed value from the reciprocal estimate function 210, and this computed value is a single precision result value 230. Further, logic of the multiplexer 225 is provided in Table 1 below.
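The selection performed by the multiplexer can be sketched in software. Table 1 is not reproduced in this text, so the mapping used here is an assumption based on the usual IEEE 754 conventions: the reciprocal of ±0 is ±∞ and the reciprocal of ±∞ is ±0, with the sign preserved. The function name is hypothetical, and a plain division stands in for the estimate function.

```python
import math

def reciprocal_with_special_cases(x: float) -> float:
    """Select between special-case constants and the computed estimate."""
    zero_detected = (x == 0.0)          # true for both +0.0 and -0.0
    infinity_detected = math.isinf(x)
    if zero_detected:
        return math.copysign(math.inf, x)   # assumed: 1/±0 -> ±infinity
    if infinity_detected:
        return math.copysign(0.0, x)        # assumed: 1/±infinity -> ±0
    # Nothing detected: pass the computed value through.
    # 1.0 / x is a stand-in for the hardware estimate function.
    return 1.0 / x

from_negative_zero = reciprocal_with_special_cases(-0.0)
from_negative_infinity = reciprocal_with_special_cases(-math.inf)
```

Handling these inputs by selection rather than by computation means the estimate function itself never has to deal with zeros or infinities.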

This logic applies for the zero detect 215 and infinity detect 220, as will be discussed later. In current implementations, double precision reciprocal estimate instructions and reciprocal square root estimate instructions give a result for double precision inputs. In Power ISA™, double precision reciprocal or square root estimate instructions give a result for single precision inputs when a shared architected register file format is used. Round results correctly to both the precision and range of the double format. This strict enforcement of double precision would be most useful for programs that test either numerical software or the arithmetic itself near the limits of both the range and precision of the double format. Such careful test programs tend to be difficult to write in a portable way; they become even more difficult when they must employ dummy subroutines and other tricks to force results to be rounded to a particular format.

In FIG. 11, a block diagram 1100 illustrates an exemplary double precision multiply estimate function 1105 that processes a double precision input for input value 405 and returns a single precision result value 450 according to the fourth embodiment. FIG. 6 illustrates a single precision number in a 32 bit register 605 and a 64 bit register 610 for a double precision number. The circuits 312 are configured to store the single precision number in the 64 bit register 610. This can be done by converting the single precision exponent to a double precision exponent and by ensuring that the low order 29 mantissa bits are zero, as shown in the 64 bit register 615.
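The 29 zero bits follow from the field widths: a double has 52 fraction bits and a single has 23, and 52 − 23 = 29. A short sketch, using only Python's `struct` module and a hypothetical helper name, shows the bit pattern that results when a single precision value is held in a 64 bit container.

```python
import struct

LOW_29_BITS = (1 << 29) - 1

def single_in_double_register(value: float) -> int:
    """Round to single precision, then return the 64 bit double bit pattern."""
    as_single = struct.unpack("<f", struct.pack("<f", value))[0]
    return struct.unpack("<Q", struct.pack("<d", as_single))[0]

pattern_for_tenth = single_in_double_register(0.1)
pattern_for_one = single_in_double_register(1.0)
```

The exponent is rebiased from 127 to 1023 during the widening, and the fraction bits that single precision cannot express are all zero.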

Accordingly, the single precision number is architecturally represented in the architected register file of the 64 bit register 615 as a set of bits corresponding to double precision. In the IEEE standard, rounding occurs whenever an operation has a result that is not exact, since each operation is computed exactly and then rounded. The standard requires that three other rounding modes be provided, namely round toward 0, round toward +∞, and round toward -∞. When used with the convert to integer operation, round toward -∞ causes the convert to become the floor function, while round toward +∞ is ceiling. The rounding mode affects overflow, because when round toward 0 or round toward -∞ is in effect, an overflow of positive magnitude causes the default result to be the largest representable number, not +∞.
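The relationship between directed rounding and the floor and ceiling functions can be sketched with Python's `decimal` module. Python does not expose the rounding mode of binary floating-point hardware, so decimal arithmetic is swapped in here purely to illustrate the four modes; the helper `convert_to_integer` is an illustrative name.

```python
from decimal import Decimal, ROUND_CEILING, ROUND_DOWN, ROUND_FLOOR, ROUND_HALF_EVEN

def convert_to_integer(text: str, rounding: str) -> int:
    """Convert a decimal string to an integer under the given rounding mode."""
    return int(Decimal(text).to_integral_value(rounding=rounding))

toward_minus_infinity = convert_to_integer("-2.5", ROUND_FLOOR)    # floor
toward_plus_infinity = convert_to_integer("-2.5", ROUND_CEILING)   # ceiling
toward_zero = convert_to_integer("-2.5", ROUND_DOWN)               # truncation
to_nearest_even = convert_to_integer("-2.5", ROUND_HALF_EVEN)      # default-style rounding
```

Only round toward -∞ moves -2.5 down to -3; the other three modes all give -2 for this input, which shows why the choice of mode matters most for values that are not exactly representable.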

Similarly, overflows of negative magnitude will produce the largest negative number when round toward +∞ or round toward 0 is in effect. IEEE 754 specifies that when an overflow or underflow trap handler is called, it is passed the wrapped-around result as an argument. The definition of wrapped-around for overflow is that the result is computed as if to infinite precision, then divided by 2^α, and then rounded to the relevant precision.

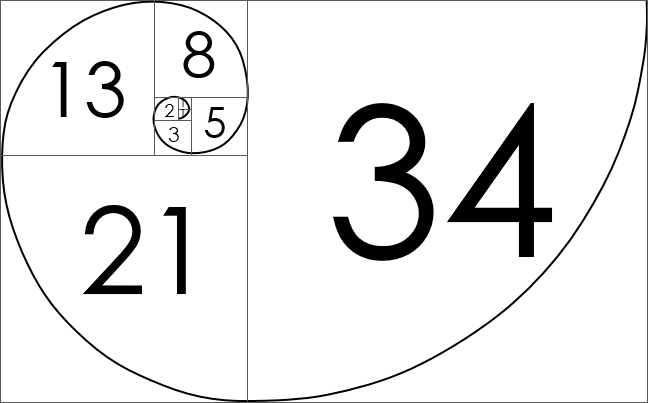

The exponent α in the wrapped-around scaling factor 2^α is 192 for single precision and 1536 for double precision. This is why 1.45 × 2^130 was transformed into 1.45 × 2^-62 in the example above. Squeezing infinitely many real numbers into a finite number of bits requires an approximate representation. Although there are infinitely many integers, in most programs the result of integer computations can be stored in 32 bits. In contrast, given any fixed number of bits, most calculations with real numbers will produce quantities that cannot be exactly represented using that many bits.
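The wrapped-around transformation for single precision overflow can be checked numerically. In this sketch a Python double stands in for the "infinitely precise" result, which is an assumption that holds only because 1.45 × 2^130 fits comfortably in a double; the helper names are illustrative.

```python
import math
import struct

ALPHA_SINGLE = 192  # scaling exponent for single precision

def to_single(x: float) -> float:
    """Round a double to the nearest single precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def wrapped_overflow_single(exact_result: float) -> float:
    """Divide by 2**192, then round to single precision."""
    return to_single(math.ldexp(exact_result, -ALPHA_SINGLE))

overflowing = math.ldexp(1.45, 130)          # too large for single precision
wrapped = wrapped_overflow_single(overflowing)

# frexp returns a mantissa in [0.5, 1), so shift by one to read it as 1.45 x 2**e.
mantissa, exponent = math.frexp(wrapped)
significand, power = mantissa * 2, exponent - 1
```

The exponent drops from 130 to 130 − 192 = −62 while the significand stays at 1.45 up to single precision rounding, so a trap handler can recover the original scale by adding 192 back.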
